The Ask AI automation action can be used to automate AI prompts and responses. A 'prompt' is sent to your configured AI Provider. The response is returned to an automation variable which can then be used further in your automation workflow. ThinkAutomation supports:

ChatGPT, Azure OpenAI, Grok or a local OptimaGPT.

You can send single one-off prompts or prompts that are part of a conversation.

Before you can use this action you must set up an AI Provider in the ThinkAutomation Server Settings - AI Providers section.

See OptimaGPT if you prefer to use an on-premises or private-cloud AI server.

From the AI Provider list, select one of your configured AI Providers.

Specify the Operation:

- Ask AI To Respond To A Prompt

- Add Context To A Conversation

- Clear Conversation Context

- Ask AI To Respond To A Prompt With An Image

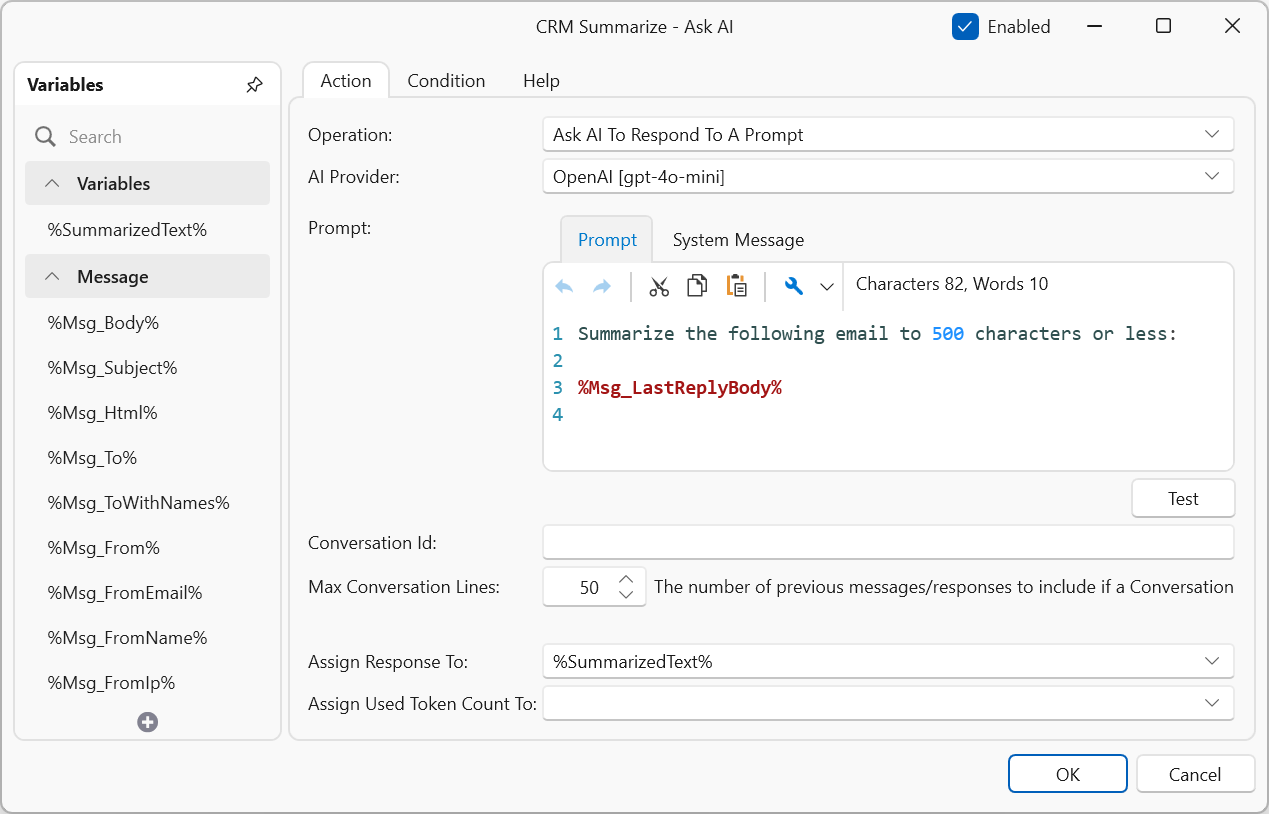

Ask AI To Respond To A Prompt

This operation is used to send a prompt to an AI. The response to the prompt can then be assigned to a variable which can then be used further in the automation workflow.

The System Message is optional. This can help to set the behavior of the assistant. For example: 'You are a helpful assistant'.

The Prompt is the text you want a response to. This can be any sort of AI prompt and can contain %variables%.

Examples:

What category is the email below? Is it sales, marketing, support or spam?

Respond with 'sales', 'marketing', 'support' or 'spam' only.

If it is a support email and it appears to be urgent, respond with 'support:urgent'.

Subject: %Msg_Subject%

%Msg_LastReplyBody%

Extract the name and mailing address from this email:

Dear Kelly,

It was great to talk to you at the seminar. I thought Jane's talk was quite good.

Thank you for the book. Here's my address 2111 Ash Lane, Crestview CA 92002

Best,

Maya

I am flying from Manchester (UK) to Orlando.

What are the airport codes? Respond with just the codes separated by comma.
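A constrained reply, such as the one requested by the email category prompt above, is easy to act on but should still be validated, because a model can add quotes, capitals or punctuation. The following is a minimal Python sketch of that validation. It is an illustration only and not part of ThinkAutomation; the function name `parse_category` and the 'unknown' fallback are assumptions.

```python
# Hypothetical helper for illustration; not a ThinkAutomation API.
ALLOWED_CATEGORIES = {"sales", "marketing", "support", "spam"}


def parse_category(response: str) -> tuple[str, bool]:
    """Return (category, is_urgent) from a reply such as 'support:urgent'."""
    # Tolerate surrounding whitespace, quotes and a trailing period.
    cleaned = response.strip().strip("'\".").lower()
    category, _, flag = cleaned.partition(":")
    category = category.strip()
    if category not in ALLOWED_CATEGORIES:
        # Anything outside the requested set is treated as unclassified.
        return ("unknown", False)
    is_urgent = category == "support" and flag.strip() == "urgent"
    return (category, is_urgent)
```

In an Automation, the equivalent check would normally be done with conditions on the response variable; the sketch only shows the logic.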

Prompts have a limit of approximately 30000 words (depending on the Model).

Tip: When using AI to analyze incoming emails, you can use the %Msg_Digest% built-in variable instead of %Msg_Body%. The %Msg_Digest% variable contains the last reply text only, with all blank lines and extra whitespace removed. It is also trimmed to the first 750 characters. This is usually enough to categorize the text and will ensure the prompt does not exceed the token limit.

Tip: If you want to use AI to automatically respond to incoming emails you should use the %Msg_LastReplyBody% built-in variable instead of the %Msg_Body%. The %Msg_LastReplyBody% variable contains only the current reply, without any quoted text & previous replies. This ensures only the current message and not the entire email thread is sent.
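To make the %Msg_Digest% description concrete, the sketch below approximates it in Python: blank lines are dropped, extra whitespace is collapsed and the result is trimmed to 750 characters. The function `make_digest` is hypothetical and the exact rules ThinkAutomation applies may differ.

```python
import re


def make_digest(last_reply: str, max_chars: int = 750) -> str:
    """Approximate a digest: no blank lines, single spaces, trimmed length."""
    lines = []
    for line in last_reply.splitlines():
        # Collapse runs of spaces/tabs and trim each line.
        collapsed = re.sub(r"\s+", " ", line).strip()
        if collapsed:  # skip lines that were blank or whitespace only
            lines.append(collapsed)
    return "\n".join(lines)[:max_chars]
```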

Response

Specify the variable to receive the response from the Assign Response To list.

You can also optionally assign the number of tokens used for the prompt/response. Select the variable to receive the tokens used from the Assign Used Token Count To list. AI usage fees are based on tokens used.

Conversations

You can optionally specify a Conversation Id. This is useful if multiple AI requests will be made and you want to include previous prompts/responses for context, or if you want to add your own context prior to asking the AI for a response.

The Conversation Id can be any text. For example, setting it to %Msg_FromEmail% will link any requests for the same incoming email address. The built-in variable %Msg_ConversationId% can be used for the Conversation Id. This is a hash of the from/to addresses and subject.

The Max Conversation Lines entry controls the maximum number of previous prompt/response pairs that are included with each request. For example, if the Max Conversation Lines is set to 25 then the last (most recent) 25 prompt/response pairs will be sent prior to the current prompt. As the conversation grows, the oldest items will be removed to prevent the total prompt text going over the AI token limit.

Conversations are shared by all Automations within a Solution and conversation lines older than 48 hours are removed.

For example:

Suppose you send 'What is the capital city of France?' in one prompt and receive a response. If you then send another separate prompt of 'What is the population?' with the same conversation id then you will receive a correct response about the population of Paris because the AI already knows the context. This would work across multiple Automation executions for up to 48 hours, as long as the conversation id is the same.
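The conversation behavior described above (a shared history keyed by Conversation Id, limited to the most recent pairs and to 48 hours) can be modeled roughly as follows. This is a simplified in-memory sketch under those stated rules, not ThinkAutomation's implementation; the class `ConversationStore` and its methods are hypothetical.

```python
import time
from collections import defaultdict

MAX_AGE_SECONDS = 48 * 60 * 60  # conversation lines older than 48 hours are ignored


class ConversationStore:
    """Hypothetical in-memory model of conversation history."""

    def __init__(self):
        # conversation id -> list of (timestamp, prompt, response), oldest first
        self._lines = defaultdict(list)

    def add(self, conversation_id, prompt, response, now=None):
        now = time.time() if now is None else now
        self._lines[conversation_id].append((now, prompt, response))

    def history(self, conversation_id, max_lines=25, now=None):
        """Return the most recent pairs that are still within the age limit."""
        now = time.time() if now is None else now
        fresh = [
            (prompt, response)
            for ts, prompt, response in self._lines[conversation_id]
            if now - ts <= MAX_AGE_SECONDS
        ]
        # Keep only the last max_lines pairs; these precede the current prompt.
        return fresh[-max_lines:] if max_lines > 0 else []
```

Each new request would send the pairs returned by `history` before the current prompt, which is why a follow-up question can rely on earlier answers.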

Add Context To A Conversation

You can add context to a conversation. Context is used to help the AI give the correct answer to a question based on the context you have provided. This can be static text, or you can search articles related to the incoming message from the Embedded Knowledge Store and add the most relevant articles. Using the Ask AI action along with the Add Context operations enables you to create bots that can answer business specific questions - even when the AI itself has no knowledge of the subject matter.

Lookup Context From Knowledge Store

Using the Embedded Knowledge Store enables you to add text and documents (PDF, Word, HTML etc) as articles to a Knowledge Store collection. You can then search the knowledge store using the incoming message as the Search Text. This will return the Top x most relevant articles from the specified Knowledge Store Collection.

The Relevancy Threshold setting controls the relevancy level. Articles below the relevancy % will not be included. This value defaults to 20%.

The Return Max Tokens entry should be set to a value lower than the maximum tokens that the AI model supports. It is recommended to keep this at approximately 50% of the model maximum. For example, if you will be using the OpenAI ChatGPT gpt-3.5-turbo-16k model, then this allows 16384 tokens, so the Return Max Tokens entry should be no higher than 8192. This will ensure that there are enough tokens remaining for the prompt text and the AI response itself.

The returned articles will each be added as context, allowing the AI to use this information when responding to the incoming message.
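The interaction between the Top x, Relevancy Threshold and Return Max Tokens settings can be pictured with the sketch below. It is one plausible reading of the settings, not the actual search code: the function `select_articles`, the 0 to 1 relevancy scale, the default of 5 articles and the rough 4-characters-per-token estimate are all assumptions.

```python
def rough_token_count(text: str) -> int:
    """Very rough estimate: about 4 characters per token, rounded up."""
    return (len(text) + 3) // 4


def select_articles(results, top_n=5, threshold=0.20, return_max_tokens=8192):
    """Pick articles to add as context.

    results: list of (relevancy, article_text) with relevancy between 0 and 1.
    """
    selected, used = [], 0
    ranked = sorted(results, key=lambda item: item[0], reverse=True)
    for relevancy, text in ranked[:top_n]:
        if relevancy < threshold:
            continue  # below the relevancy threshold, so not included
        cost = rough_token_count(text)
        if used + cost > return_max_tokens:
            break  # stop before exceeding the token budget
        selected.append(text)
        used += cost
    return selected
```

With a 16384-token model, a `return_max_tokens` of 8192 leaves roughly half of the model's allowance for the prompt text and the response.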

If you want to add a specific article from a Knowledge Store collection, rather than relying on a search (for example, based on specific keywords found in the incoming message), use the Embedded Knowledge Store action to look up a specific article (using the Get operation). Set the Return As to Json, assign the returned article to a %variable%, then add this variable to the Add Static Context tab.

Top Title/Tag

You can optionally assign the Top Title and/or Top Tag to variables. The first (most relevant) article Title and Tag will be assigned. This can be used further in your Automation. For example: Suppose we have a number of articles relating to 'pricing' or 'quotes' or 'sales'. We could tag all of these with a 'sales' tag. At the end of the Automation we could check the Top Tag %variable%. If this is equal to 'sales' we could send an email to the sales team informing them that someone has asked something sales related - we could send the question and AI response with this email.

Add Static Context

You can also add Static Context. This can be any text (which can contain %variable% replacements). This can be used to provide default context, for example:

You are a very enthusiastic representative working at {your company}.

Given the following sections from our documentation, answer the user's question using only that information, outputted in markdown format.

If you are unsure and the answer is not explicitly written in the documentation, say "Sorry, I don't know how to help with that."

You should always add some default context. Use it to tell the AI who and what it is and how it should respond. You can also provide some general information about your business.

For email responder bots you can use default context such as:

Your name is '{bot name}' and you are a very enthusiastic representative working at {your company} answering emails. Given the provided sections from our documentation, answer the question using only that information, outputted in markdown format.

If an answer cannot be found in the information provided, respond with 'I cannot help with that' only.

Do not try and answer the question if the information is not provided.

Add a friendly greeting and sign off message to your response. Your email address is '{bot email address}'.

My email address is %Msg_FromEmail%

This tells the AI that it's responding to emails and to include a greeting and sign off message with its response.

If the Required option is enabled then the context text will remain in the conversation. This option can be used to ensure that the default or important context is always part of the conversation.

You could also look up static context via a database or web lookup. For example: If the customer provides an email at the start of the chat, or you are responding to incoming emails, you could look up customer & accounting/order information and add this to the context in case the customer asks about outstanding orders. Or you could look up current service status if the user wants live status information.

Regardless of how the context is added, the same context won't be added to a conversation if the conversation already has it. So you can add standard context (for example, general information about your business) along with searched-for context within your Automation prior to asking the AI for a response.
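The effect of this duplicate check is that an Automation can add the same default context on every run without the conversation growing. A minimal Python sketch of the idea follows. It assumes duplicates are detected by comparing the trimmed text exactly, which may not match how ThinkAutomation compares context; the class `ConversationContext` is hypothetical.

```python
class ConversationContext:
    """Hypothetical model of the context held for one conversation."""

    def __init__(self):
        self._items = []    # context text in the order it was added
        self._seen = set()  # text already present, for duplicate checks

    def add(self, text: str) -> bool:
        """Add context unless identical text is already present."""
        key = text.strip()
        if not key or key in self._seen:
            return False  # empty or duplicate context is ignored
        self._seen.add(key)
        self._items.append(key)
        return True

    def items(self):
        return list(self._items)
```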

You can add multiple Ask AI - Add Context To A Conversation actions in your Automation prior to the Ask AI - Ask AI To Respond To A Prompt action.

For example: Suppose you have a company chat bot on your website using the Web Chat message source. A user asks 'What are the benefits of widgets, and can you tell me the current price?'. You first add default context, you then do a knowledge base search with the Search Text set to the incoming question. This adds the most relevant articles relating to widgets to the conversation as context. If the incoming message contains 'widgets', you could then do a database lookup to get the current price for Widgets and add 'The current price for Widgets is %Price%' as static context. AI will then be able to answer the user's questions from the context you provided.

The context itself does not appear in the chat, it is only added to the prompt sent to the AI to provide context to help the AI answer that specific question. The benefit of this is that you can use the standard AI models without training - and you can provide up to date context by keeping your local knowledge base updated or looking up context from your own database. It allows you to quickly create working bots, maintain up-to-date information, and harness the full potential of AI while maintaining control over your data and data privacy.

Note: ChatGPT has a limit of 128,000 tokens per request. Typically a token corresponds to about 4 characters of text. Prompt text includes any context added and the response from ChatGPT itself. ThinkAutomation automatically removes older context to ensure the token limit is not exceeded. If you add too much context, some of it may not be included.
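As a rough illustration of the note above, the sketch below estimates tokens at about 4 characters each and drops the oldest context until the request fits, keeping any context marked as Required. The trimming order, the `reserve_for_response` allowance and the function names are assumptions made for the example; ThinkAutomation's actual trimming logic is not documented here.

```python
TOKEN_LIMIT = 128_000
CHARS_PER_TOKEN = 4  # typical average, not exact


def estimate_tokens(text: str) -> int:
    """Estimate tokens from character count, rounded up."""
    return (len(text) + CHARS_PER_TOKEN - 1) // CHARS_PER_TOKEN


def fit_context(prompt, context_items, reserve_for_response=4_000, limit=TOKEN_LIMIT):
    """Return the context text that fits within the token limit.

    context_items: list of (text, required) tuples, oldest first.
    """
    budget = limit - reserve_for_response - estimate_tokens(prompt)
    kept = list(context_items)
    while sum(estimate_tokens(text) for text, _ in kept) > budget:
        for index, (_, required) in enumerate(kept):
            if not required:
                del kept[index]  # remove the oldest non-required context
                break
        else:
            break  # only required context remains; nothing more to drop
    return [text for text, _ in kept]
```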

Adding Tabular Context

You can add tabular context to a conversation. A user can then ask questions relating to the data.

For example, you could look up invoices for a customer (based on the email address provided) from a database and return the data in CSV format:

invoice_number,invoice_date,product,amount_due

INV-2023351,2023-01-05,Plain Widgets,1500.00

INV-2023387,2023-01-10,Orange Niblets,2500.00

INV-2023421,2023-01-15,Flat Widgets,1800.00

INV-2023479,2023-01-20,Flat Widgets,3500.00

INV-2023521,2023-01-25,Round Niblets,1200.00

You would assign the CSV data to a %variable% and then add Static Context:

Given the following list of invoices in CSV format for the user, answer questions about this data. The 'amount_due' column gives the outstanding balance for the invoice in dollars.

%CSVData%

The chat user could then ask questions such as:

What is the date of my most recent invoice? or Can you show me a list of my invoices?

When adding context as tabular data, you need to precede the data with a clear instruction of what the data is. You would need to experiment with the prompt text to ensure the AI responds correctly.
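If the rows come from your own code rather than a database action that already returns CSV, the context text could be assembled as in the Python sketch below. It uses the standard `csv` module; the function `build_tabular_context` is a hypothetical helper, and the result is what you would pass in as static context.

```python
import csv
import io


def build_tabular_context(rows, instruction):
    """Return the instruction followed by the rows rendered as CSV.

    rows: list of dicts that all share the same keys (column names).
    """
    if not rows:
        return instruction
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()    # column names help the AI interpret each value
    writer.writerows(rows)  # values containing commas are quoted automatically
    return instruction + "\n\n" + buffer.getvalue().strip()
```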

Clear Conversation Context

This operation will clear any Context added to a conversation. Specify the Conversation Id.

Ask AI To Respond To A Prompt With An Image

This operation can be used to ask a question about an image. The image can be a local file or a URL. The System Message is optional. This can help to set the behavior of the assistant. For example: 'You are a helpful assistant'.

The Prompt is the question you want to ask about the provided image.

In the Image Path Or URL entry, specify a local file path or URL for the image. You can use %variable% replacements. The following image types are supported: PNG, JPEG, WEBP & GIF.

Select the variable to receive the response from the Assign Response To list.

Examples:

You can ask general questions about the image, such as 'What is in this image?' or 'Is this image an animal?'. You can also perform OCR, for example: 'Convert the image to text.' or 'The image is a receipt. What is the total paid and the tax?'.

Rate Limits

Your AI Provider will set a rate limit for the maximum requests per minute. ThinkAutomation will retry the request if a rate limit error is returned. It will automatically increase the wait time for each retry. The default wait period is 30 seconds. If the request still fails after the retries then an error will be raised.
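The retry behavior can be sketched in Python as below. Only the 30 second default wait and the increasing wait per retry come from the description above; the number of retries, the linear increase (30s, 60s, 90s) and the `RateLimitError` type are assumptions for illustration.

```python
import time


class RateLimitError(Exception):
    """Hypothetical error standing in for a provider's rate limit response."""


def call_with_retries(send_request, max_retries=3, base_wait=30.0, sleep=time.sleep):
    """Call send_request, waiting longer after each rate limit error."""
    for attempt in range(max_retries + 1):
        try:
            return send_request()
        except RateLimitError:
            if attempt == max_retries:
                raise  # retries exhausted, surface the error
            # Assumed schedule: wait grows by base_wait on each retry.
            sleep(base_wait * (attempt + 1))
```

The `sleep` parameter exists only so the waits can be replaced in a test; normal use would leave it at the default.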

If your Ask AI action is timing out then your AI account may be rate limited. For OpenAI, you can increase the rate limit by adding pre-payment funds to your OpenAI account. This will move your account to the next usage tier. For example, adding a $50 pre-payment will move your account to usage tier 2 - which will have a higher rate limit and faster responses. See: OpenAI Rate Limits for more information.

AI Automation Use Cases

Using AI with ThinkAutomation has many uses. Other than being a regular chat bot that has knowledge of many subjects, you can use it to:

- Create a Chat Bot using the Web Chat Message Source type that can answer specific questions about your business by adding private context using the Embedded Knowledge Store.

- Provide automated responses to incoming emails or SMS messages, utilizing the Embedded Knowledge Store.

- Create a Teams Message Source type so that Microsoft Teams users can ask your bot questions.

- Create a Chat Bot Automation that uses the API Message Source type. This can be the same type of Automation that you would use for the Web Chat Message Source type - but called via the API. This would allow you to use ThinkAutomation from your own chat web app or external chat apps/products.

- Parse unstructured text and extract key information.

- Summarize text.

- Anonymize text.

- Classify text.

- Translate text.

- Sentiment analysis.

- Correct grammar/spelling.

- Convert natural language into code (SQL, PowerShell etc) and then use the response to perform a database operation.

- Ask questions about images or extract text from images.

and much more. See: Examples - OpenAI
