ThinkAutomation offers a vast automation workstation kitted with hundreds of tools. If you haven’t got the time to build out your own custom workflows, or lack experience in using the tools, then we can help.

Setup support

You might already have a great tech team, who just need an overview on ThinkAutomation usage. In these instances, we can put our expert automation technicians at your disposal.

Our team has deep technical and industry knowledge, and can show you how to implement ThinkAutomation in accordance with best practices.

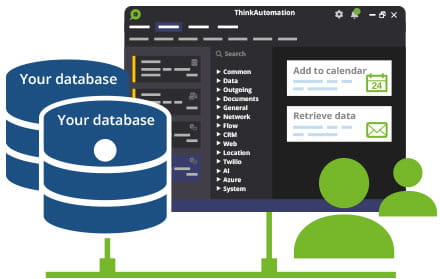

Custom configuration

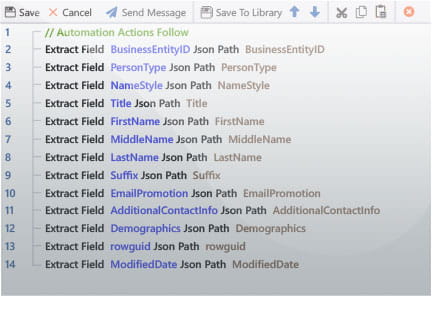

If you’d prefer, we can install and configure ThinkAutomation on your behalf. This setup is shaped around your needs, with custom workflows that meet your specific automation objectives.

During setup, we can map ThinkAutomation to your database tables, integrate with your systems and connect with your required APIs – all while building the rules that will automate your processes.

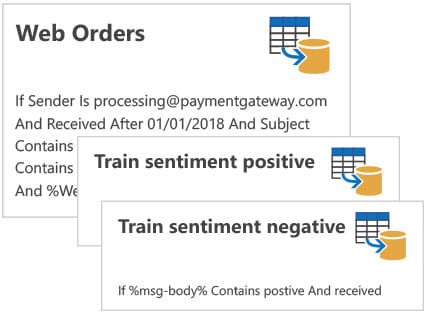

Automation testing

We don’t just build – we test along every step of the way. This includes testing account message retrieval, testing trigger conditions and testing trigger actions.

Importantly, we complete this automation testing before deploying to your production servers. You’re guaranteed success from the first day of installation.

Documentation

Once ThinkAutomation is configured, it will do its job silently and uninterrupted in the background. But if you’d like to experiment with more workflows, we can provide you with help documentation.

This can be customised to your specific business and setup, including an overview of your existing deployment and checklists for workflow creation.